Business in a world of reasoning AI

AI is now thinking “fast and slow”, redefining software, SaaS, and the future for the rest of us.

A professional lamplighter climbing a ladder to ignite a gas lamp in early 20th-century Paris (Recraft V3)

“there is no wall” - On November 13, 2024, Sam Altman hinted at what was about to come. OpenAI once again changed the course of history with the introduction of reasoning models, reaching PhD-level performance on complex math questions. Many reports like to talk about “walls”, but in this context I would like to use the analogy of electricity. With electricity, we lived through the era of Benjamin Franklin and the theories of the 18th and 19th centuries (Faraday, Morse, etc.), and in the 1880s the light bulb and direct current (DC) were first popularized. DC was groundbreaking at the time, signaling the dawn of a new electric era, but the initial technology had serious limits for distributing power over long distances. Only years later did the adoption of alternating current (AC), driven by Westinghouse and Tesla, truly enable the electrification of the world, powering entire cities at a time.

We might see a similar pattern with AI: we lived through the theories of Turing, McCarthy, Schmidhuber & co. and, in the 2010s, arrived in the intelligence age with the introduction of the transformer. But only in recent months have we realized that our current way of scaling pre-training might be similar to DC. Ilya Sutskever said at NeurIPS a couple of weeks back that “pre-training as we know it will unquestionably end,” while at the same time signaling that a new paradigm of scaling test-time compute has begun. Introduced through o1 and now o3, “reasoning models” unlock massive opportunities for scaling intelligence along a new dimension. Potentially these reasoning models, like AC, can handle challenges DC never could, changing our current understanding of AI.

In this post I want to take a stab at giving some predictions of what the introduction of reasoning models might do to the way we work. In the first part of this post, I explore how new reasoning models may commoditize software, reshape engineering teams, and lead to a vast market consolidation, with data, regulation, and compute acting as the central constraints. In the second part of the post, I look beyond software to see what AI more capable than humans might hold in store for knowledge workers, with new modalities like voice and video challenging today’s human advantages, potentially turning many professionals into modern-day lamplighters.

Commoditization of Software

With o3 reaching 70% on SWE-bench and ranking 175th in the world in competitive programming, and with companies like Cursor, Cognition, and Lovable gaining staggering amounts of traction, the statement “AI is eating software” only gets further affirmation. Up until now, robustness and context windows were the biggest bottlenecks, and even o1 still struggles with large code repositories and edge cases. 2025 and the new line-up of reasoning models will give us clarity on whether code generation and maintenance are similar to the promise of self-driving cars, which took several years to get to a level of full automation, or whether we are truly about to witness an unprecedented level of AI automation in production.

If we assume that reasoning models will excel at writing, maintaining and debugging production software at or above human expert level, these could be some of the implications:

Workforce cuts are imminent.

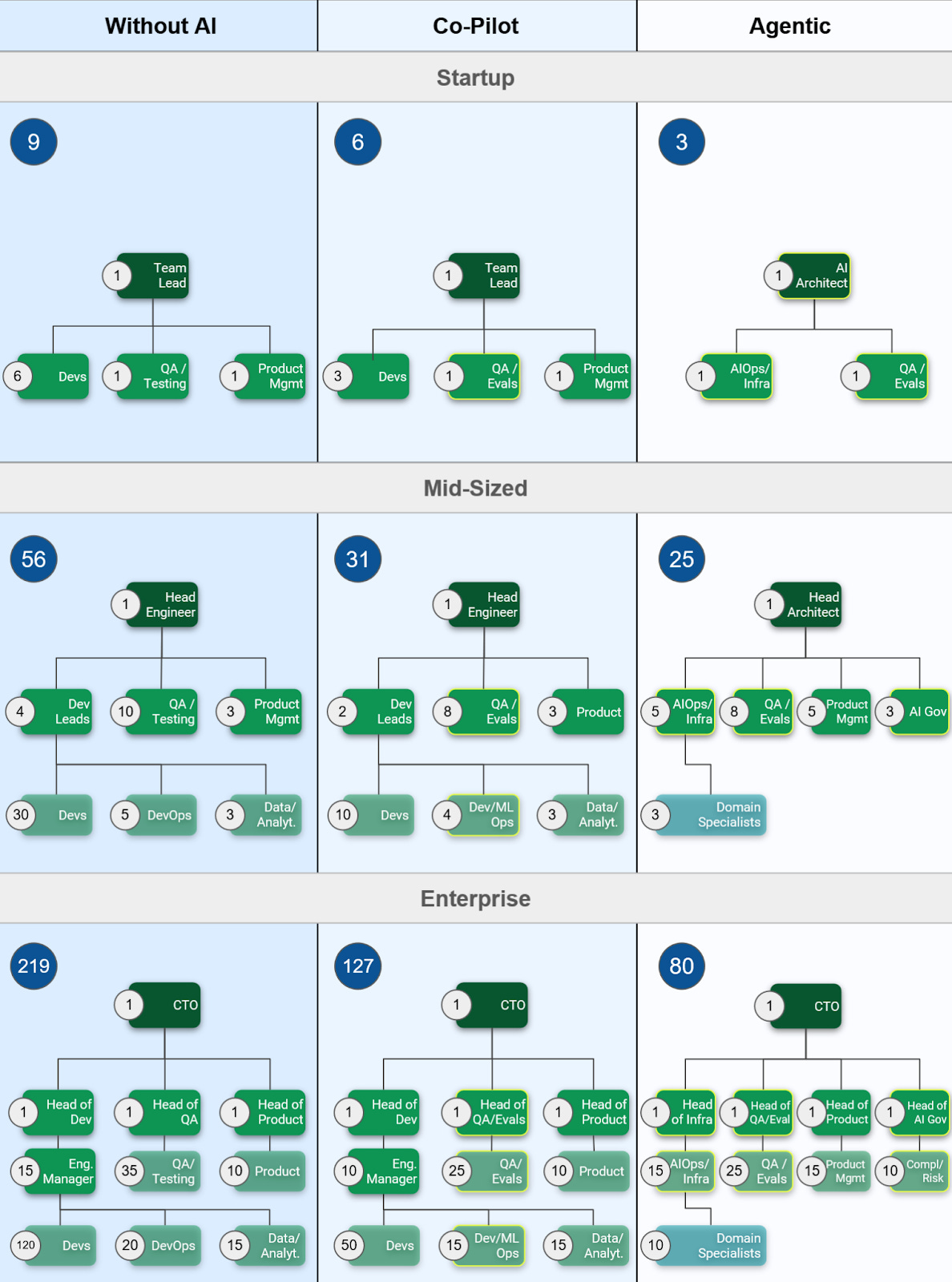

Even in a world without the new paradigm of reasoning models, software engineers and researchers report drastic improvements in efficiency through AI. Anthropic and OpenAI continually impress developers with their models' capabilities, and the need for non-AI engineers has decreased significantly, with some going as far as recommending that CS majors drop out or declaring “Winter is coming for software engineering”. But before encouraging major career shifts, I want to look at how such a transition would actually unfold in an organisation. I put together a diagram of how a software engineering team might evolve across three stages. The first is a world with no AI tools available. The second is “Co-Pilot” usage, our status quo, where AI leads to massive productivity gains but needs close supervision at every step of the way. The third is a fully agentic world, potentially arriving with new reasoning models in 2025, in which AI models become capable enough to create, maintain and verify their own code. In this world the human takes the role of an architect/product manager, as well as the role of a quality assurance/compliance manager, whereas writing or debugging code becomes an infrequent responsibility. For illustrative purposes, I drew up some imaginary company org charts. Junior and generalist developers in particular may be affected, while roles in AIOps, evaluations, and AI governance/compliance could see headcount increases. In my scenarios, headcounts drop by an average of 40% when moving from a non-AI to a co-pilot world, and then by another 35% when moving to a fully agentic world.

The above diagram is a best guess of how AI might transform the software function of a business along different dimensions, assuming steady output. The diagram is oversimplified and does not accurately depict any real company.
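To make the compounding in these scenarios explicit, here is a minimal sketch of the arithmetic. It assumes that the second 35% drop applies to the already reduced co-pilot headcount rather than to the original baseline, and the starting team size of 100 is a hypothetical number chosen purely for illustration.

```python
def headcount_after(baseline: float, reductions: list[float]) -> float:
    """Apply successive fractional reductions to a baseline headcount."""
    remaining = baseline
    for reduction in reductions:
        remaining *= 1.0 - reduction
    return remaining


# Hypothetical team of 100 engineers in a world without AI tools.
baseline = 100

# Scenario figures from the text: -40% (co-pilot), then another -35% (agentic).
copilot = headcount_after(baseline, [0.40])
agentic = headcount_after(baseline, [0.40, 0.35])

# Total reduction relative to the original baseline.
total_cut = 1 - agentic / baseline

print(f"Co-pilot world: {copilot:.0f} engineers")
print(f"Agentic world:  {agentic:.0f} engineers")
print(f"Total cut:      {total_cut:.0%}")
```

Under these assumptions, a team of 100 shrinks to roughly 60 and then to roughly 39, a combined reduction of about 61% rather than 75%, because the second cut acts on a smaller base.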

SaaS is dead

Echoing what Satya Nadella said a couple of weeks ago, software will become replicable and drastically drop in value. Everyone who capitalized software IP at its development cost years ago can prepare to write those assets down to the compute cost it would take to replicate them. Whenever something becomes commoditized, prices drop and usage spikes. Similarly, whenever something becomes commoditized, the source does not matter anymore: it does not matter to your phone whether it is charged with electricity from coal or nuclear. Your software will not care whether it was written by artificial intelligence or human intelligence. Your CFO, however, will.

With access to enough intelligence, anyone could build any software they desire in a matter of minutes. In the coming months we might move further from thinking about “how do I build this?” to “what do I want?”. Ultimately, you would only think about your use case or customer and receive the most customizable solution imaginable. Off-the-shelf software becomes a relic of the past. If you can think of a feature, you will get it. We are already seeing this change in the drastic decrease in the time it takes to go from scratch to MVP (v0, Lovable, Cursor), and we should expect this time to drop further once these software agents are powered by smarter reasoning models in 2025.

Bottlenecks

Predicting what will happen once software becomes a commodity and intelligence flows like alternating current is no easy task. However, we can be somewhat certain that some constraining variables will remain the same, at least in the medium term: data, regulation, and compute.

Data

You could hire the ten best meteorologists on the planet to make a prediction about tomorrow's weather. But if you don't give them access to data on temperature, humidity, wind patterns, and satellite images, they will inevitably do a lousy job. The same holds true for integrating AI into your business. If you don't link it up to your existing data sources, or even worse, you don't collect the data in the first place, even your 300 IQ reasoning model will do a lousy job.

In contrast to the pre-training era, where just scraping every last piece of text on the internet was sufficient, scaling in this new paradigm is based on a different kind of data: chain-of-thought (CoT). Training on CoT data (oversimplified) means training the model on step-by-step thought processes, e.g. the thoughts you might have when solving a tricky math question. Adoption of AI in business will likely be hindered by the limited availability of such CoT data in a specific business context. Perhaps we will observe a wave of human data annotators who, instead of labelling pictures or social media posts, are tasked with recording their thought processes while creating a PowerPoint on corporate strategy. Depending on how much real data is needed and how much can be done with reinforcement learning (the process used for training these reasoning models), we might even observe CEOs recording their reasoning process when making tough business decisions, or choosing between two chains of thought produced by the AI in order to further enhance the model.

Regulation

Even if you can spin up a brand-new and improved HR system in a few hours, intelligence won't help you if compliance tells you that you can’t integrate any sensitive user data. Regulation, as always, is a double-edged sword: it might protect you and your company from security, privacy, or business risk, but it might also drastically hinder your ability to implement new solutions. This becomes especially problematic if the solution relies on a black-box AI that is more intelligent than you, potentially deceptive, and likely serving its creator's interests over yours.

Compute

Given the stock price of Nvidia, it seems like many people have understood this. Intelligence requires hardware and it requires energy. In the case of reasoning models, many naysayers quickly downplayed the unprecedented results of o3 with “But this would be way too expensive if every query costs you thousands of dollars”. What is somewhat overlooked is that with this new paradigm of reasoning models we actually taught the model “Thinking, fast and slow”. Just as in Kahneman’s book, AI is now able to think fast (next-word prediction - GPT-4, Claude 3.5) and slow (CoT reasoning - o1, o3). It will take some time for the AI to figure out when it needs to apply which type of thinking, but this shouldn’t distract us from the underlying paradigm shift. Similar to the price drops we saw with earlier models, we should expect reasoning models to either be or quickly become competitive with human developers in terms of cost.

Luckily, the pace at which both energy and compute are scaling is pretty transparent. Big Tech is constantly in the headlines for buying land and reactivating power plants, while Nvidia just announced its new superchip.

Microsoft, you won.

I could have titled the entire article “Microsoft, you won.” but I will keep it to this section. If we think about the above bottlenecks, Microsoft seems to be in a more than ideal position. As Satya Nadella beautifully put it during OpenAI’s meltdown of late 2023, Microsoft is “below them, above them, around them [OpenAI]”, and he went on to state “we have everything” with regard to their data and capabilities. On top of that, they also recently lifted their for-profit cap with a new definition that could allow Microsoft to make hundreds of billions, if not trillions, off of OpenAI’s work before it is supposedly “going to benefit all of humanity”.

However, much more important than just owning the oil fields, i.e. controlling OpenAI, is that Microsoft provides the most widely deployed business software suite on the planet. Almost every business already uses their products (MS 365), most of them already trust Microsoft with their data (OneDrive), and Microsoft already provides a staggering number of them with compute (Azure). If software becomes a commodity and you as a business want to implement some super customizable & agentic solution, why even bother looking for a specialized vendor? Chances are high that you will go with the provider that you already have a subscription with, that already hosts your data (especially all of the Teams/PowerPoint data on your business workflows and strategy), and that you know meets all of the required compliance standards. And if that was not enough, Microsoft just announced plans to invest another $80bn in data centers, effectively enabling them to control the entire value chain without having to split any margin. So yes, SaaS is dead, and Satya killed it.

We should expect a massive market consolidation. We currently live in a time where human intelligence still buys you lunch, with new startups spinning up solutions within weeks and getting millions of users overnight. But once creating software no longer requires any special skills, we should expect a large chunk of the currently fragmented SaaS cake to be eaten up by Microsoft (& OpenAI), Google (& DeepMind), and Amazon (& Anthropic).

Commoditization of knowledge work

Software & SaaS are currently a good example of what the future might hold for other sectors. To think about it, I like to ask the question “What percentage of your job is not a computer input?”. If your current comparative advantage over AI is that you can switch windows on your desktop to copy & paste the LLM output into a different program, you might not want to become too comfortable in your job.

Unlocking new modalities

Even if your job is more than just moving pixels on a computer, other modalities such as voice, video, and robotics are either already here or on the horizon.

Voice has largely been unlocked with the release of the OpenAI Realtime API and players like ElevenLabs. Consequently, increasing adoption in, and substitution of, customer support and sales functions seems to be underway [source, source]. However, even if intelligence is fully there and the AI completely understands the user's request, data limitations might slow down adoption. If your AI voice agent doesn’t know more than your FAQ page and lacks information on edge cases, internal policies, or previous customer interactions, you can expect that customers will still insist on speaking with a real person. In addition to intelligence and data, a third ingredient is necessary for widespread voice adoption: it needs to feel real. Coming from a world where human-to-human conversation is the norm, and where voice assistants have disappointed for decades, you need to convince people that it’s worthwhile to talk to a machine. The best way to do so is for them not to realize they are speaking to one.

Video models such as Sora and Veo were released within the last few weeks, and although these models are fun to play around with and will likely get much better and cheaper, I imagine their effect will be limited to parts of the marketing, media, and content creation professions. A bigger opportunity in the context of video might be interactive video avatars. Just as we prefer Zoom over phone calls, we will likely see interactive AI avatars across various domains that you can jump on a Zoom call with. One use case for interactive video avatars I would like to highlight is personalized AI tutoring, something I’ve been involved with at Reeview.ai. Our goal is to create personalized online tutors that identify exactly where you’re stuck in your learning journey and hold you accountable for making progress, using human-like avatars that feel just like interacting with a real online tutor.

Revisiting the question, “What percentage of your job is not a computer input?”, these new modalities might further challenge humanity's current pole position in the business world.

The end of consulting

In consulting you are selling skilled labour. If we assume that intelligence becomes abundant and knowledge work gets largely commoditized over the coming years, the outlook for consultancies is not too bright. Mainstream IT consultants in particular might currently be eating their last supper. Accenture has been one of the big winners of AI, with over $3.7B in bookings related to GenAI. However, by helping businesses leverage AI and automate with it, they are reducing the number of projects they could be involved in going forward as IT consultants. Again, highly specialized employees and those with a wealth of domain expertise might stick around for longer, but ultimately, if you are charging by the hour in sectors that could see demand for human labour in free fall, tough times might be ahead. Even worse off are nations whose main source of income is exporting knowledge work. Take India as an example, where the IT and Business Process Management sector accounts for approximately 7.5% of GDP.

The broader future ahead

In the short term, AI will help workforces become more efficient. In the medium term, it will replace many humans in domains that are ripe for innovation and under high competitive pressure. In the long run, once enough data has been collected, compute costs have decreased, and compliance issues have been resolved, AI might turn the vast majority of knowledge workers into the lamplighters and steam engine operators of the 21st century.

Even if distribution shifts and robustness continue to be an issue for the next 5 or even 10 years (Waymo/Google took ~13 years from their first prototype to publicly available autonomous vehicles), we are about to enter a future no one (except maybe Big Tech) seems truly prepared for. In this future, human capital, like intelligence, becomes commoditized, and in turn, financial and social capital will likely increase in value significantly. After all, it is the investors who own the value-producing machines.

Many futures seem plausible given the anticipated rapid increase in capacity to produce goods and services. One path could lead to a dystopian hyper-capitalistic society where wealth concentrates in the hands of those owning AI-enabling companies, while even skilled workers and entrepreneurs can no longer build wealth. The only path to changing class would be through fame or social status and monetizing one's personal brand, while the majority "unemployable" class would be kept just comfortable enough to prevent revolt. Meanwhile, media controlled by the wealthy would keep the population distracted with polarizing and emotional topics, drawing attention away from the massive wealth consolidation taking place.

Another path opens up if we get the governance right. Strong policy frameworks could prevent extreme wealth concentration and ensure those displaced by AI don't sink into poverty. A creative solution would be to overhaul our educational system. Instead of rushing kids through school to join a workforce that no longer needs them, people would stay in a newly reformed education system much longer, throughout their adult lives. The goal wouldn't be to create workers, but to develop people who enrich society through culture, arts and community engagement, while providing them with purpose beyond monetary goals.

Just as the lamplighters of Paris gave way to the electric revolution, we stand at a similar inflection point where human knowledge work may soon be transformed by reasoning AI. It is on us to shape this future and ensure that AGI truly benefits all of humanity.

The above was influenced by many great posts and ideas, some main ones:

**By default, capital will matter more than ever after AGI** by Rudolf L

**The Intelligence Curse** by Luke Drago

OpenAI Researcher Noam Brown Unpacks the Full Release of o1 and the Path to AGI - Unsupervised Learning by Redpoint Ventures

Thanks to Jacques and Rocket for proofreading drafts of this post.